Will a Graphics Card speed up my editing? What about an external one?

Introduction

For the most part, I’ve been satisfied with my current Windows notebook, which I bought about 18 months ago. It is based on an Intel reference design with a good cooling system and features an i7-1165G7 CPU with an integrated Iris Xe graphics processing unit (GPU), 16 gigabytes of RAM, a fast 2TB SSD, and a Thunderbolt 3 connection to the docking station that links my monitors and other peripherals. It’s no longer a leading-edge unit, but it still delivers pretty solid performance.

Yet, over the past few months, it felt as if my notebook was getting slower and slower. This had nothing to do with its performance deteriorating. No, it was merely that my use of the unit had become more and more demanding, and the reason for that is AI.

The ever-increasing use of Artificial Intelligence has huge implications everywhere. While some of its consequences, such as the emergence of AI-generated images or AI-written text, are frequently discussed in the media and elsewhere, one area that matters to me receives far less attention: the adoption of AI techniques in photo editing programs creates greater demands on the hardware they run on.

Not too long ago, Adobe Lightroom and Photoshop were frequently criticized for making little use of a system’s graphics processor. In most cases, whether or not your system had a dedicated GPU changed these programs’ image processing times by only a few percentage points, if even that. Now, however, Lightroom has gained AI denoising and “Super Resolution” functionality, and Photoshop now includes features such as Generative Fill, which relies on AI technology to fill missing areas in photos or even create completely new ones. Similar features were added to other software, not to mention a whole new category of AI-based image generators such as DALL-E 2 or Midjourney.

I’m not a fan of the latter, but I often use AI-based denoising and sharpening, and sometimes functions such as object removal. All of these place high demands on hardware performance. The more of them I used, the longer I had to wait when processing my photos. Inevitably, I started to look for ways to speed things up. While notebooks with faster processors are available, the difference isn’t big enough to yield substantial performance gains. The biggest impact could come from a more powerful GPU than the one in my current notebook.

I quickly discarded the idea of buying a new notebook with a dedicated graphics card, usually referred to as a ‘gaming notebook’ since gaming seems to be its primary purpose. These machines are big and heavy, their batteries don’t last all that long because GPUs are power-hungry, and they tend to be noisy because of the cooling fans they require. For a while, I then considered buying or building a new PC with a dedicated GPU card, to be used only for editing. However, I travel quite often, so giving up my notebook was and is out of the question. That would have meant running two systems and synchronizing my image collection between them, since I often take pictures ‘on the road’ and edit them while still away from home. Not a pretty option, either.

I finally decided to give an eGPU a try.

If you’re not sure exactly what that is: the ‘e’ stands for external, so an eGPU is a graphics card in a separate enclosure that can be connected to a notebook (or a PC) via a standard Thunderbolt 3 or 4 connection. Thunderbolt, in turn, is a serial connection that looks and feels much like a standard USB connection with USB-C connectors (it is in fact backward compatible with USB), but it is fast enough for a graphics card to be run externally. eGPU enclosures have power supplies strong enough for demanding GPU cards, plus they feature the same PCIe card slots that desktop PCs have, meaning that most standard graphics cards can be used in them.

There is a certain performance penalty, though: a graphics card performs better when installed in a PC than when connected via Thunderbolt. The estimates I saw put the loss at between 10 and 30 percent. With that in mind, I went for a mid-range graphics card, a Sapphire Pulse AMD Radeon RX 6800, and the smallest decent-looking enclosure I could find, an Akitio Node Titan (which is still BIG). Naturally, the key question in my mind was whether the investment would pay off: would this eGPU accelerate my editing enough to justify the roughly 800 Euros the whole setup cost me?
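To put that 10 to 30 percent into perspective, here is a small Python sketch. It rests on a simplifying assumption that was not measured here: the penalty is treated as a fractional loss of throughput, so a job that would take t seconds with the card inside a PC takes t / (1 − penalty) over Thunderbolt. The function name `egpu_time` and the 10-second example job are hypothetical illustrations, not benchmark data.

```python
def egpu_time(desktop_seconds: float, penalty: float) -> float:
    """Estimated processing time over Thunderbolt.

    Hypothetical model: 'penalty' is the fraction of throughput lost
    compared with the same card installed inside a desktop PC.
    """
    if not 0 <= penalty < 1:
        raise ValueError("penalty must be in the range [0, 1)")
    return desktop_seconds / (1 - penalty)


# A hypothetical job that takes 10 seconds with the card inside a PC
for penalty in (0.10, 0.30):
    print(f"{penalty:.0%} penalty: {egpu_time(10.0, penalty):.1f} s")
```

Under this model, a 30 percent throughput penalty stretches processing time by roughly 43 percent rather than 30 percent, which is worth keeping in mind when comparing eGPU results with desktop benchmarks.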

Setup

My setup may be a bit unusual: I already owned a Thunderbolt docking station, an HP G2 model, which connects all my peripherals, including two monitors. Since the eGPU serves only for editing, I wanted to be able to disconnect it without disrupting the rest of my system, so I left the monitor setup unchanged. In other words, no monitor is connected to my eGPU. If your setup differs and you run your monitors through the eGPU, the resulting performance hit should be small, though.

In setting all this up, I hoped that the added eGPU would not wreak havoc with my system. It turned out it didn’t: my system keeps working exactly as it did before. After plugging in the eGPU’s cable and setting everything up, the only difference now is that I have an additional GPU available. Many photo editors let you choose which GPU to use (if any), so using them with or without the eGPU connected works pretty seamlessly.

My other worry also turned out to be unfounded: the HP docking station (the small box only partly visible in the image) and the Akitio enclosure (the large box to the left) can each supply plenty of power to my notebook through their respective USB-C cables. I half expected conflicts between these two power sources, but both appear to implement this feature properly: whichever one I connect first supplies the power to the notebook, and the one connected later does not interfere.

Quite literally, the biggest issue is the size of the eGPU enclosure, though I may be able to hide it under my desk. Other than that, the eGPU has worked without hiccups so far.

Benchmarks

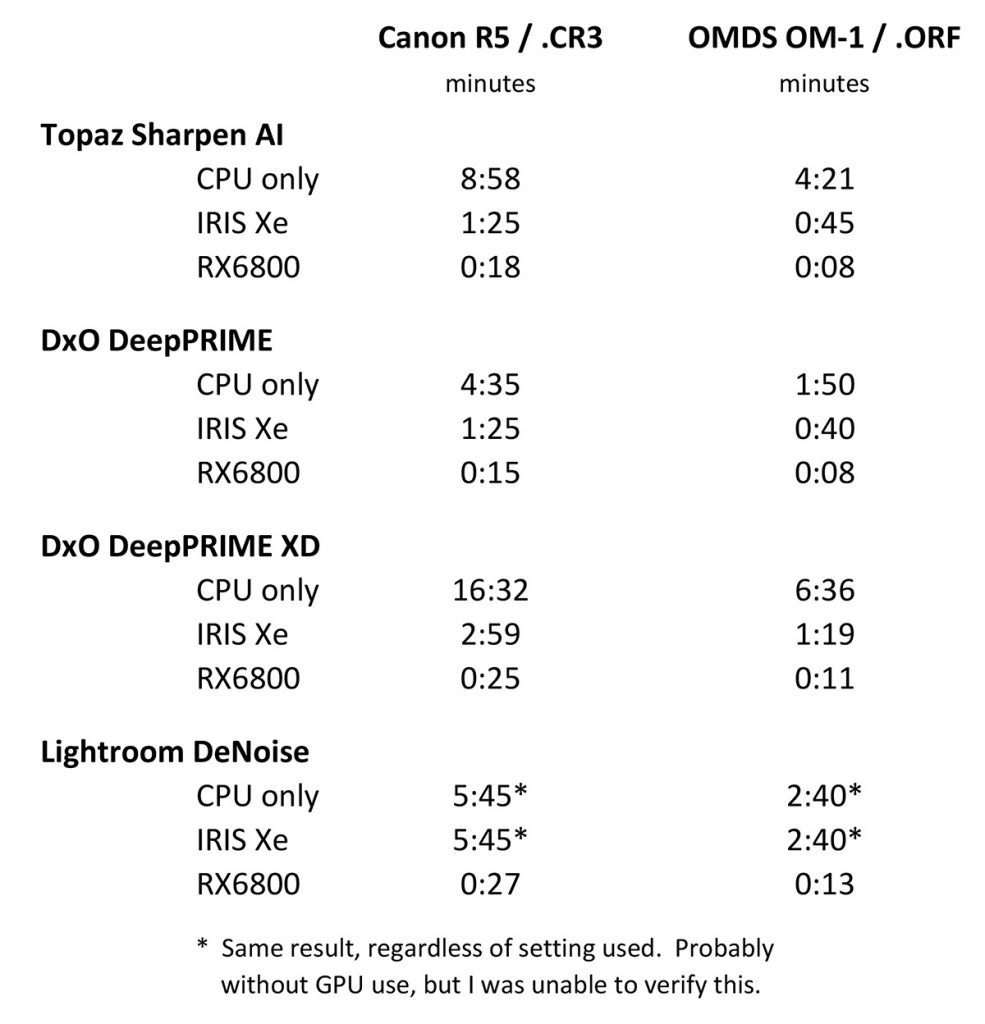

Depending on the task at hand, I use different photo editors and plug-ins, which allowed me to benchmark several of them. To find out how much image size matters, I used two different RAW test images, one shot with a 45-megapixel Canon R5 (.CR3 file format) and the other with a 20-megapixel OMDS OM-1 (.ORF file format). Both test images are underexposed and were shot in poor light at ISO 12,800 and ISO 16,000 respectively, meaning they contain plenty of noise.

I tested four different operations that typically take the longest when processing images:

- Sharpening and denoising a RAW image, using Topaz SharpenAI 4.1.0 as a plug-in in Lightroom, set to “Motion Blur – Very Blurry” with values of 60 for Blur and 40 for Noise.

- Converting a RAW image to DNG in DxO PhotoLab 6.8, using its DeepPRIME denoising algorithm in its default settings.

- Converting a RAW image to DNG in DxO PhotoLab 6.8, using its DeepPRIME XD denoising algorithm in its default settings.

- Converting a RAW image to DNG in Lightroom 12.4, using its Denoise algorithm in its default settings.

Furthermore, I conducted each test in three different modes:

- while only using the CPU,

- while using the GPU built into the CPU, and

- while using the external GPU.

The results were as follows:

As expected given the different camera resolutions, processing the 45MP Canon R5 files usually took a bit more than twice as long as processing the 20MP files from the OM-1. Per the above table, Topaz SharpenAI ran about five times faster with both file types when using the eGPU. In the case of DxO PhotoLab and the two algorithms I tested, the speedup factor ranged from about 5 to more than 7.

As noted at the bottom of the table, Lightroom’s noise reduction performance remained unchanged regardless of whether I told it to use the CPU only or the Iris Xe GPU. I suspect that it simply ignores the integrated GPU and uses only dedicated GPUs. The eGPU, however, sped it up dramatically: both the Canon image and the OMDS one took less than one-twelfth(!) of the time.
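What these factors mean for actual waiting time can be sketched in a few lines of Python. Two assumptions apply: processing time is taken to scale roughly linearly with pixel count (which gives a ratio of 2.25 between the 45MP and 20MP files, consistent with the “a bit more than twice as long” observation), and a speedup factor s is taken to cut the wait by 1 − 1/s. The helper names `speedup` and `time_saved` are hypothetical, and the factors used are the approximate ones quoted above, not additional measurements.

```python
def speedup(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Speedup factor: how many times faster the accelerated run is."""
    return baseline_seconds / accelerated_seconds


def time_saved(speedup_factor: float) -> float:
    """Fraction of waiting time eliminated by a given speedup factor."""
    return 1 - 1 / speedup_factor


# Assumption: processing time scales roughly linearly with pixel count.
pixel_ratio = 45 / 20  # Canon R5 (45 MP) vs. OM-1 (20 MP)
print(f"Expected time ratio R5 vs. OM-1: {pixel_ratio:.2f}")

# Approximate factors from the text: ~5 (SharpenAI), up to ~7 (DxO), >12 (Lightroom)
for factor in (5, 7, 12):
    print(f"{factor}x faster -> {time_saved(factor):.0%} less waiting")
```

For example, a fivefold speedup removes 80 percent of the waiting time, while a twelvefold one removes about 92 percent.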

Conclusions

There can be no doubt that adding a dedicated graphics card to a PC, or externally to a notebook, gives certain photo processing applications a significant performance boost. Keep in mind that many functions, such as brightening, darkening or saving images, may see only marginal improvements, particularly in Photoshop and Lightroom, which currently make less use of GPUs than several other programs do. Note, however, that Adobe has announced that future versions of its programs, for instance Camera Raw, will demand much more GPU performance, with some functionality not available at all unless your system has a compatible GPU.

Overall, AI-supported functions tend to benefit greatly from the resulting boost in graphics performance. If you install a GPU directly in your PC instead of using an eGPU, expect to see proportionately bigger performance gains.

However, as they say, “your mileage may vary”: how much faster your system will run depends on how fast the added graphics card is, whether it runs through a bottleneck such as the Thunderbolt connection, what kind of software you use, and so on. Nevertheless, you can expect a significant boost in exactly those functions that often take the most time when editing your images.

Lothar Katz

July 2023